Code Style Guide

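The four things programmers hate most: writing comments, writing docs, other people not writing comments, and other people not writing docs.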

1. Formatting Conventions

1.1 Style Standards

1.1.1 PEP 8: The Official Python Style Guide

PEP 8 is the official Python style guide. It covers the following topics:

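Code layout: conventions for blank lines, line breaks, and imports. For example, it answers a common question: when a line of code is too long to fit, where may it be broken?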

Expressions: conventions for whitespace inside expressions.

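Trailing commas: when a list is too long for one line and is written one item per line as below, put a comma after the last item. This makes it easier to append items and keeps version-control diffs clean.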

```python
# Correct:
FILES = ['setup.cfg', 'tox.ini']

# Correct:
FILES = [
    'setup.cfg',
    'tox.ini',
]

# Wrong:
FILES = ['setup.cfg', 'tox.ini',]

# Wrong:
FILES = [
    'setup.cfg',
    'tox.ini'
]
```

Naming conventions, comment conventions, and type-annotation conventions: these are covered in detail in later sections.

A style guide is about consistency. Consistency with this style guide is important. Consistency within a project is more important. Consistency within one module or function is the most important.

PEP 8 -- Style Guide for Python Code

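Note that PEP 8 is not absolute: consistency within a project takes precedence over PEP 8. Every OpenMMLab project defines some style settings in its setup.cfg; please follow them. For example, PEP 8 contains the following recommendation: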
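The PEP 8 example referred to here appears to be its guidance on spacing around operators of different priorities (the original example is not reproduced in this document). The sketch below, with made-up values for `a`, `b`, `x`, and `y`, contrasts the precedence-indicating style PEP 8 recommends with the uniformly spaced style that yapf typically produces when ARITHMETIC_PRECEDENCE_INDICATION is disabled.

```python
# Hypothetical operand values, chosen only so the snippet runs.
a, b = 3, 1
x, y = 2.0, 5.0

# PEP 8's recommended style: drop the spaces around higher-priority
# operators so that the grouping is visible at a glance.
hypot2 = x*x + y*y
c = (a+b) * (a-b)

# Uniform spacing around every binary operator.
hypot2_uniform = x * x + y * y
c_uniform = (a + b) * (a - b)

# Whitespace is purely cosmetic: both styles evaluate identically.
assert hypot2 == hypot2_uniform
assert c == c_uniform
```

Because the two forms are semantically identical, choosing one is purely a matter of project configuration.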

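This recommendation uses spacing to indicate operator precedence. However, OpenMMLab's settings usually do not enable yapf's ARITHMETIC_PRECEDENCE_INDICATION option, so the formatter will not produce the recommended style. In such cases, the project settings take precedence.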

1.1.2 Google Style Guides

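These are the programming style guides used at Google, including a chapter on Python. Compared with PEP 8, the Python guide is considerably more detailed. It has two parts: language rules and style rules.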

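The language rules weigh the pros and cons of many Python language features, such as exceptions, lambda expressions, list comprehensions, and metaclasses, and give guidance on when to use them. The style rules are close to PEP 8: most conventions build on PEP 8, with some more detailed ones added, such as function length, TODO comments, and access to file and socket objects.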

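We recommend using this guide as a reference during development, but it need not be followed strictly. First, the guide carries some Python 2 compatibility requirements. For example, it requires every class without a base class to inherit explicitly from `object`. In a Python 3-only environment this is unnecessary, so follow the existing convention of the project. Second, as framework-level open-source software, OpenMMLab projects (MMCV in particular) need not shy away from advanced techniques. Before using such a technique, however, consider carefully whether it is really necessary, and seek broad review from other developers.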
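To illustrate why the explicit `object` base is redundant in Python 3: every class there is a new-style class, so the two hypothetical classes below end up with the same bases and the same method resolution order (apart from the class itself).

```python
class Box:
    """Python 3 style: no explicit base class."""


class LegacyBox(object):
    """Python 2 compatible style required by the Google guide."""


# In Python 3, both classes inherit from `object` either way.
assert Box.__bases__ == LegacyBox.__bases__ == (object,)
assert Box.__mro__ == (Box, object)
assert LegacyBox.__mro__ == (LegacyBox, object)
```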

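Another rule that needs attention concerns imports. The guide requires local packages to be imported by their full path, with each imported module on its own line. This is usually unnecessary and does not match the current development practice of our projects, so we adopt the following convention: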

1.2 Formatting Tools

1.2.1 flake8

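Flake8 is a Python style checker maintained by PyCQA. It wraps the following three tools:

PyFlakes: checks Python code for simple logical errors, such as unused imports or variables and undefined names.

pycodestyle: checks whether Python code follows the style defined in PEP 8.

Ned Batchelder's McCabe script: analyzes the cyclomatic complexity of Python code. Cyclomatic complexity is commonly used to measure whether the logical structure of code has become too complex to maintain. Flake8 does not enable this check by default.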
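As a rough illustration, the hypothetical module below runs fine but contains the kind of issues each component reports. The codes in the comments are the ones flake8 commonly uses (`F` for PyFlakes, `E` for pycodestyle, `C901` for McCabe); treat them as indicative and check the flake8 documentation for the authoritative list.

```python
import os  # F401: PyFlakes reports `os` as imported but unused


def scale(values, factor=2):
    unused_total = sum(values)  # F841: local variable is never used
    return [v * factor for v in values]


def classify(n):
    # Each extra branch raises the cyclomatic complexity measured by the
    # McCabe check; it is only reported (as C901) once a limit such as
    # `max-complexity` is configured.
    if n < 0:
        return 'negative'
    elif n == 0:
        return 'zero'
    elif n < 10:
        return 'small'
    return 'large'


result=scale([1, 2])  # E225: pycodestyle, missing whitespace around `=`
```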

1.2.2 yapf

Yapf is a code formatter developed and maintained by Google. It complements flake8 and runs in the pre-commit hook of every OpenMMLab project.

1.2.3 isort

A tool that automatically sorts Python imports. It groups imports into three sections (standard library, third-party, and local) to keep the structure clear.

1.2.4 Pre-commit

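Pre-commit uses Git's hook mechanism to bundle the tools above with several other checks, and runs them automatically on every Git commit. The commit goes through only if no errors are found, which guarantees a baseline of code quality.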

For how to install and initialize pre-commit, see the "Contributing to OpenMMLab" page of the mmclassification documentation.

2. Naming Conventions

2.1 Why Naming Matters

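Good names are the foundation of readable code. Basic naming conventions govern how each kind of identifier is written, so that readers can tell from a name alone whether it is a class, a local variable, a global variable, and so on. Good naming goes further: it requires the author to understand clearly what each variable does and to express that well, so that readers grasp its meaning from its name, and may even understand what the surrounding code does.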

2.2 Basic Naming Rules

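| Type | Public | Private | Note |
| --- | --- | --- | --- |
| Modules | `lower_with_under` | `_lower_with_under` | |
| Packages | `lower_with_under` | | |
| Classes | `CapWords` | `_CapWords` | |
| Exceptions | `CapWordsError` | | |
| Functions | `lower_with_under()` | `_lower_with_under()` | |
| Global / class constants | `CAPS_WITH_UNDER` | `_CAPS_WITH_UNDER` | |
| Global / class variables | `lower_with_under` | `_lower_with_under` | |
| Instance variables | `lower_with_under` | `_lower_with_under` (protected), `__lower_with_under` (private) | "Private" here is more than a naming convention: it triggers Python's name mangling, and it is rarely needed for custom variable names in practice. |
| Methods | `lower_with_under()` | `_lower_with_under()` (protected), `__lower_with_under()` (private) | |
| Local variables | `lower_with_under` | | |
| Function / method parameters | `lower_with_under` | | |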
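The hypothetical class below applies several rows of the table and shows the name mangling mentioned in the note: an attribute written as `__secret` inside the class body is actually stored as `_Counter__secret`.

```python
class Counter:
    """Hypothetical class illustrating the naming rules in the table."""

    MAX_COUNT = 100  # class constant: CAPS_WITH_UNDER

    def __init__(self) -> None:
        self.count = 0  # public instance variable
        self._step = 1  # protected: a naming convention only
        self.__secret = 42  # private: name-mangled to `_Counter__secret`

    def increment(self) -> None:  # public method: lower_with_under()
        self.count = min(self.count + self._step, self.MAX_COUNT)


c = Counter()
c.increment()
# `_step` is reachable under its own name, whereas `__secret` is stored
# as `_Counter__secret`, so `c.__secret` outside the class fails.
```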

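Tips:

Avoid names that clash with reserved words. If a clash is unavoidable, append a single trailing underscore, e.g. `class_`.

Avoid overly short names, except for conventional ones such as the loop variable `i`, the file variable `f`, and the exception variable `e`.

A variable that will never be used can be named `_`; linters will ignore it.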
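A small hypothetical function applying these tips: a trailing underscore for a name that would clash with a reserved word, and `_` for a value that is deliberately ignored.

```python
import keyword
from typing import List, Optional, Tuple


def count_class(records: List[Tuple[str, float]],
                class_: Optional[str] = None) -> int:
    """Count (label, score) records, optionally only those of one class."""
    # `class` is a reserved word, hence the trailing underscore in `class_`.
    if class_ is None:
        return len(records)
    # The score is never used, so it is bound to `_`.
    return sum(1 for label, _ in records if label == class_)


assert keyword.iskeyword('class')
assert not keyword.iskeyword('class_')
```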

2.3 Naming Tips

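A good variable name satisfies three requirements: 1. its meaning is precise and unambiguous; 2. its length is moderate; 3. it is used consistently throughout the code.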

Common ways to name functions:

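Verb-object naming: `crop_img`, `init_weights`

Inverted (object-verb) naming: `imread`, `bbox_flip`

Keep the function name and the order of its parameters consistent, with the subject first, so that a call reads naturally: `check_keys_exist(key, container)`, `check_keys_contain(container, key)`

Avoid unconventional or non-standard abbreviations: for example, write `num_blocks` instead of `nb`, and `in_channels` instead of `in_nc`.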
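The two function names from the text can be sketched as below. The implementations are hypothetical (and the parameter is named `keys` because it takes several keys); they only show how the argument order follows the name, so each call reads like a sentence.

```python
from typing import Container, Hashable, Iterable


def check_keys_exist(keys: Iterable[Hashable], container: Container) -> bool:
    """Check that (the) keys exist in (the) container."""
    return all(key in container for key in keys)


def check_keys_contain(container: Container, keys: Iterable[Hashable]) -> bool:
    """Check that (the) container contains (the) keys."""
    return all(key in container for key in keys)


cfg = {'lr': 0.1, 'momentum': 0.9}
assert check_keys_exist(['lr'], cfg)
assert check_keys_contain(cfg, ['lr', 'momentum'])
```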

3. Docstring Conventions

3.1 Why Write Docstrings

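A docstring describes in detail what a class or function does and what its API looks like. It serves two purposes: first, it helps other developers understand the code, which makes debugging and reuse easier; second, it is used to generate the API reference on Read the Docs automatically, helping community users who are not familiar with the source code use the corresponding features.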

3.2 How to Write Docstrings

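Unlike comments, docstrings follow strict formatting rules so that Python tooling and Sphinx can parse them; see PEP 257 for the detailed docstring conventions. Below, the standard formats are introduced through examples, based on the Google style.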

3.2.1 Module Docstrings

Style guides recommend writing a docstring for every module (i.e., every Python file), but most OpenMMLab projects currently do not have them, so this is not a hard requirement.

3.2.2 Class Docstrings

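Class docstrings are the ones we write most often. Here, following OpenMMLab convention, we depart from the Google style: the docstring does not use Attributes to describe class attributes, but uses Args to describe the arguments of `__init__`. Under Args, each argument's type and purpose follow the format `parameter (type): Description.`. Multiple types are written as `(float | str)`, and an argument that may be None is written as `(int | None)` or `(int, optional)`.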

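Note that the three quoting styles ``here``, `here`, and "here" behave differently. In reStructuredText, ``here`` marks inline code, `here` renders as italics, and "here" has no special meaning and is generally used for strings. The meaning of `here` differs from Markdown, so take extra care. There is also the more formal :obj:`type` syntax for referring to classes, but because of its length it is not required; it is generally used only for less common types. In addition, the main class of an algorithm implementation should include a link to the original paper. If the implementation is based on another open-source implementation, add "modified from"; if code is copied directly from another codebase, add "copied from" and check the license of the source code. Where needed, mathematical formulas can be added with `.. math::`.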
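A minimal sketch of a class docstring following these conventions. The class `ConvBlock` and its arguments are hypothetical; it only demonstrates the `Args` layout for `__init__`, the `(int | str)` and `(float, optional)` type notations, and double backticks for inline code.

```python
from typing import Optional, Union


class ConvBlock:
    """A hypothetical convolution block, used only to show the layout.

    Args:
        in_channels (int): Number of input channels.
        out_channels (int): Number of output channels.
        padding (int | str): Padding size, or a padding mode such as
            ``'same'``. Defaults to 0.
        dropout (float, optional): Dropout probability. ``None`` disables
            dropout. Defaults to None.
    """

    def __init__(self,
                 in_channels: int,
                 out_channels: int,
                 padding: Union[int, str] = 0,
                 dropout: Optional[float] = None) -> None:
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.padding = padding
        self.dropout = dropout
```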

3.2.3 Method (Function) Docstrings

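Function docstrings are structured like class docstrings but must also document the return value. For complex functions and classes, add examples in an Examples section; if an argument needs a longer remark, explain it in a Note section. For classes or functions that are complex to use, well-chosen examples help users understand usage far faster than long passages of prose and argument descriptions. Ideally, these examples can be run directly in the Python interactive interpreter and show the corresponding results. If there are several examples, a short comment before each one describes it and also serves as a separator.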
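A hypothetical function docstring with the Returns, Note, and Examples sections described above. The examples can be pasted into an interactive Python session, and each one is preceded by a short comment.

```python
from typing import List


def pad_to_length(seq: List[int], length: int,
                  pad_value: int = 0) -> List[int]:
    """Pad a list of integers on the right to a fixed length.

    Args:
        seq (list[int]): The input sequence.
        length (int): Target length. Must not be smaller than ``len(seq)``.
        pad_value (int): Value used for padding. Defaults to 0.

    Returns:
        list[int]: A new list whose length is ``length``.

    Note:
        The input list is not modified in place.

    Examples:
        >>> # pad a short sequence with zeros
        >>> pad_to_length([1, 2], 4)
        [1, 2, 0, 0]
        >>> # use a custom padding value
        >>> pad_to_length([1, 2], 3, pad_value=-1)
        [1, 2, -1]
    """
    if length < len(seq):
        raise ValueError(
            f'length ({length}) is smaller than len(seq) ({len(seq)})')
    return seq + [pad_value] * (length - len(seq))
```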

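To generate the Read the Docs documentation, docstrings must follow the reStructuredText format; otherwise rendering errors occur. Before submitting a PR, it is best to build the docs and preview the result. For syntax references, see: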

If a function's interface changed in a particular version, document this in its docstring, adding a Note or Warning if necessary, for example:

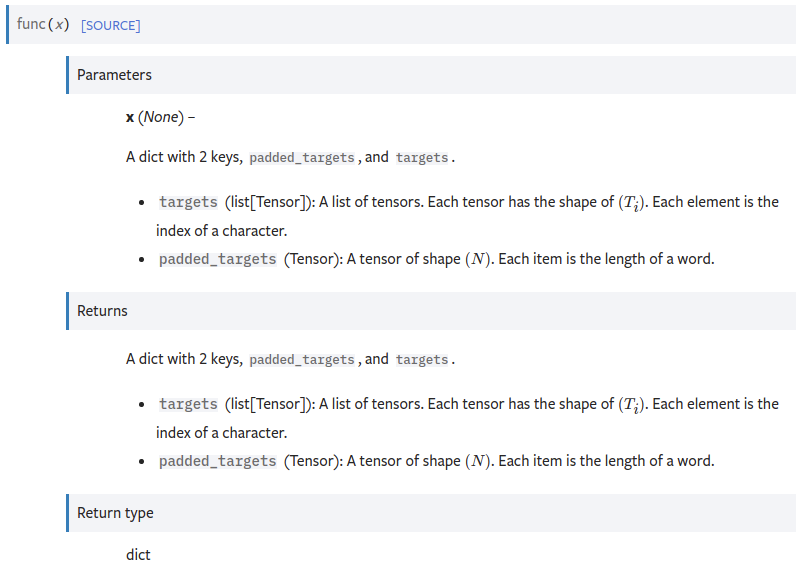

If an argument or return value is a dict whose fields need to be described individually, use the following format:

It renders as follows:

For the use of the vertical bar ("|") in reStructuredText, see https://github.com/sphinx-doc/sphinx/issues/3778 and rst Cheatsheet - Material for Sphinx (search for "vertical bar").

This syntax is known to be not fully compatible with how VS Code renders docstrings, so use it with care.

4. Comment Conventions

4.1 Why Write Comments

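Collaboration within a team and across the community is essential for an open-source project, so sensible comments matter especially. Code without comments may become hard to understand even for its own author a few months later, adding extra cost to reading and modifying it.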

4.2 How to Write Comments

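The places that most need comments are the tricky parts of the code. If you would have to explain it at your next code review, you should comment it now. Complicated operations get a few lines of comments before the operation begins. Non-obvious code gets a comment at the end of the line.

(Google Python Style Guide)

To improve readability, comments should start at least 2 spaces away from the code.

On the other hand, never describe the code. Assume that the person reading the code knows Python better than you do; they just don't know what your code is trying to do.

(Google Python Style Guide)

Markdown syntax may be used in comments, since developers are generally familiar with it and it eases communication; for example, use single backticks to mark code and variables (do not confuse this with the reStructuredText syntax used in docstrings).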
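The hypothetical function below puts these guidelines together: a block comment before the non-obvious step explains why the approach works rather than restating what each line does, the end-of-line comment sits two spaces after the code, and single backticks mark code in Markdown style.

```python
from typing import List


def merge_intervals(intervals: List[List[int]]) -> List[List[int]]:
    merged: List[List[int]] = []
    # Sorting by start makes any overlapping intervals adjacent, so a
    # single left-to-right pass is enough to merge them.
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:  # overlaps `merged[-1]`
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return merged
```

A comment such as `# append to merged` above the `append` call would only describe the code, which the guideline above advises against.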

4.3 Comment Examples

From mmcv/utils/registry.py: for a relatively complex logical structure, comments make the priority relationships explicit.

From mmcv/runner/checkpoint.py: special handling in a bug fix can be accompanied by a link to the related issue, helping others understand the background of the bug.

5. Type Annotations

5.1 Why Write Type Annotations

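Type annotations declare or hint at the expected types of variables in functions. They make code safer and more readable and help avoid type-related errors.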

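Python does not enforce types; annotations serve only as hints. Your IDE will usually parse them and show type hints when you call the annotated code. There are also type-checking tools that use the annotations to check code for potential problems, reducing bugs.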

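Note that we usually do not need to annotate every function in a module:

Public APIs should be annotated.

Weigh safety, clarity, and flexibility when deciding whether to annotate.

Annotate code that is prone to type-related errors.

Annotate code that is hard to understand.

Annotate code once its types have stabilized. In mature code, annotating all functions usually costs little flexibility.

(Google Python Style Guide)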

5.2 How to Write Type Annotations

Function and method annotations: `self` and `cls` are usually not annotated.
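A hypothetical class showing method annotations in which `self` and `cls` are left unannotated while the other parameters and the return values are annotated. The string `'Resizer'` is a forward reference, needed because the class name is not yet bound when the annotation is evaluated.

```python
from typing import Optional


class Resizer:
    """Hypothetical class used only to illustrate method annotations."""

    def __init__(self, scale: float) -> None:
        self.scale = scale

    def resize(self, size: int, max_size: Optional[int] = None) -> int:
        # `self` is not annotated; every other parameter is.
        new_size = int(size * self.scale)
        if max_size is not None:
            new_size = min(new_size, max_size)
        return new_size

    @classmethod
    def identity(cls) -> 'Resizer':
        # `cls` is not annotated either.
        return cls(1.0)
```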

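Note: the types in an annotation can be Python built-in types or user-defined classes, and the wrapper types provided by Python's `typing` module can be used to compose them. Some common annotations: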
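A few hypothetical functions using the most common `typing` wrappers. On Python 3.9+ the built-in generics (`list[int]`, `dict[str, Any]`) can replace `List` and `Dict`, and on 3.10+ `int | None` can replace `Optional[int]`.

```python
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple, Union


def apply_all(fn: Callable[[int], int], values: Sequence[int]) -> List[int]:
    # Callable[[argument types], return type] describes a function argument.
    return [fn(v) for v in values]


def to_pair(size: Union[int, Tuple[int, int]]) -> Tuple[int, int]:
    # Union: either a single int or a 2-tuple of ints.
    if isinstance(size, int):
        return (size, size)
    return size


def get_str(cfg: Dict[str, Any], key: str,
            default: Optional[str] = None) -> Optional[str]:
    # Optional[str] is shorthand for Union[str, None].
    value = cfg.get(key, default)
    return None if value is None else str(value)
```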

Variable annotations, generally used when a variable's type is hard to infer directly:
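A minimal sketch of variable annotations; `history` and `best_epoch` are hypothetical names. An empty literal gives a type checker nothing to infer the element types from, which is exactly the case this kind of annotation is for.

```python
from typing import Dict, List

# The annotation records the intended key and value types of an
# initially empty dict.
history: Dict[str, List[float]] = {}
history.setdefault('loss', []).append(0.52)
history.setdefault('loss', []).append(0.47)

# A variable can also be declared without a value; no binding is
# created until it is assigned.
best_epoch: int
losses = history['loss']
best_epoch = losses.index(min(losses))
```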

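Generics

As noted above, `typing` provides generic versions of `list` and `dict`. Can we define similar generic types ourselves?

Using the approach above, we define a mapping class with generic capabilities. It is used as follows: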
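The original class definition is not reproduced in this document, so the following is a minimal sketch, assuming a generic class built with `TypeVar` and `typing.Generic`. `SimpleMapping` is a hypothetical name.

```python
from typing import Dict, Generic, Optional, TypeVar

KT = TypeVar('KT')  # key type
VT = TypeVar('VT')  # value type


class SimpleMapping(Generic[KT, VT]):
    """A minimal mapping that is generic in its key and value types."""

    def __init__(self) -> None:
        self._data: Dict[KT, VT] = {}

    def set(self, key: KT, value: VT) -> None:
        self._data[key] = value

    def get(self, key: KT) -> Optional[VT]:
        return self._data.get(key)


# Parametrizing the class tells a type checker that this instance maps
# `str` keys to `int` values, so a call like `counts.set('dog', 'many')`
# would be flagged by mypy (it would still run, as nothing is enforced).
counts: SimpleMapping[str, int] = SimpleMapping()
counts.set('cat', 3)
```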

We can also use `TypeVar` to tie several types together in a function signature:
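A minimal sketch with hypothetical helpers: `first` links its return type to the element type of its argument, and `add` uses a constrained `TypeVar` that may only be `int` or `float`.

```python
from typing import Sequence, TypeVar

T = TypeVar('T')
Number = TypeVar('Number', int, float)  # constrained to int or float


def first(items: Sequence[T]) -> T:
    # The return type follows the element type of `items`:
    # Sequence[str] gives str, Sequence[int] gives int, and so on.
    return items[0]


def add(a: Number, b: Number) -> Number:
    # A type checker resolves `Number` per call, so add(1, 2) is typed
    # as int and add(1.5, 2.0) as float.
    return a + b
```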

5.3 Type-Checking Tools

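mypy is a static type checker for Python. Based on your annotations, mypy checks whether argument passing, assignments, and similar operations match the declared types, helping you avoid potential bugs.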

For example, consider the following Python script, test.py:
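The original file is not reproduced in this document. A minimal test.py consistent with the description, in which line 4 passes a bad argument and makes a bad assignment and line 5 repeats both under `type: ignore`, could look like this (the function name `foo` is hypothetical):

```python
def foo(var: int) -> str:
    return str(var)

a: int = foo('2')  # mypy: str passed for int, and str assigned to int
b: int = foo('2')  # type: ignore
```

At runtime the script executes without error, because annotations are not enforced; only mypy reports the problems.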

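Running `mypy test.py` reports two type errors on line 4: one in the function call and one in the assignment of the return value. Line 5 has the same two errors, but they are suppressed by `type: ignore`. Only a few special cases should need this kind of suppression.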

6. References

[1] https://www.python.org/dev/peps/pep-0008/

[2] https://zh-google-styleguide.readthedocs.io/en/latest/google-python-styleguide/contents/

[3] https://realpython.com/python-pep8/

[4] https://docs.python.org/3/library/typing.html

[5] https://mypy.readthedocs.io/en/stable/
